Demystifying the MMU - Part I

Background

In each generation of computing hardware, be it mainframes or microcomputers, engineers started out building less powerful machines, and as technology improved and prices dropped, they built more powerful ones. With each generation, computer users likewise started out single-tasking and gradually moved to multi-tasking, once the hardware became powerful enough to run multiple applications simultaneously.

When computer hardware became powerful enough and users wanted to run more than one application simultaneously, Operating System designers had to build the required support into the Operating System. To keep things simple for application developers, they had the following design goal in mind:

An application developer should not have to worry about what other programs are running in the system. The application developer can assume that the computer is completely available to the application program.

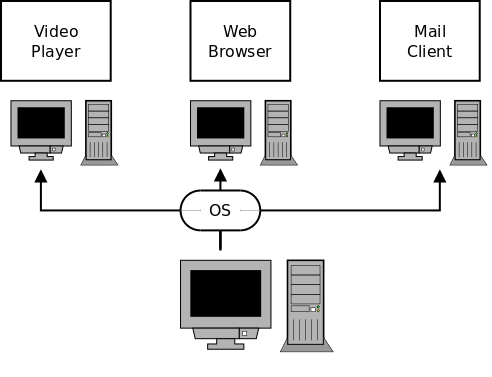

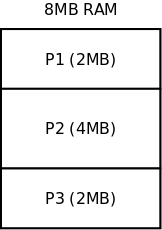

Multi-tasking operating systems achieve this by making a single computer appear as multiple computers, one for each application program. The following diagram shows three programs running on the system, each with an entire computer to itself.

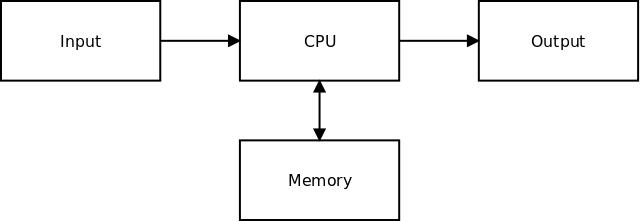

So how does the OS make a single computer appear as multiple computers? The basic building blocks of a computer are the CPU, memory and I/O. The OS has to share these blocks among the running programs in an appropriate manner. Most people are familiar with how the OS uses scheduling to share the CPU across running programs. This article discusses how the OS shares memory among the running programs. Sharing of I/O devices deserves an article of its own.

Process

Before we proceed, let us clarify the term process. A program in execution is called a process.

Process = Program + Run-time State (Global Data, Registers, Stack)

This is better understood with an example. Suppose a system has only one text-editor program, vi. We can still run two vi processes, one editing the file spam.txt and another editing the file eggs.txt. Both processes run the same program, but each has its own run-time state associated with it.
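The equation Process = Program + Run-time State can be pictured as a small data structure. The following is only a toy model, not how any real OS stores processes: the `Process` class, its field names and the pid values are hypothetical. Both vi processes point at the same program, while each carries its own global data, registers and stack.

```python
from dataclasses import dataclass, field

# Hypothetical toy model: the program is shared, the run-time state is per-process.
VI_PROGRAM = "vi"  # stands in for the vi program's code

@dataclass
class Process:
    program: str                                      # identical for every vi process
    pid: int                                          # illustrative process ID
    global_data: dict = field(default_factory=dict)   # global variables
    registers: dict = field(default_factory=dict)     # e.g. program counter
    stack: list = field(default_factory=list)         # call frames

p1 = Process(VI_PROGRAM, pid=101, global_data={"filename": "spam.txt"})
p2 = Process(VI_PROGRAM, pid=102, global_data={"filename": "eggs.txt"})

print(p1.program == p2.program)                               # True
print(p1.global_data["filename"], p2.global_data["filename"])  # spam.txt eggs.txt
```

Using `default_factory` gives each process its own fresh dictionaries and list, mirroring the fact that run-time state is never shared between the two processes.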

Perils of Sharing

Sharing memory seems simple at first, since all the OS has to do is allocate a portion of memory to each process. But sharing memory raises some subtle issues:

- The Problem of Protection
- The Problem of Fragmentation
- Support for Swapping and Relocation

The Problem of Protection

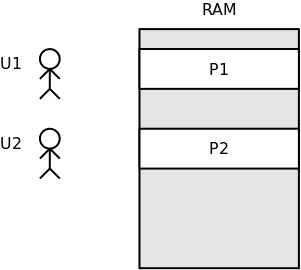

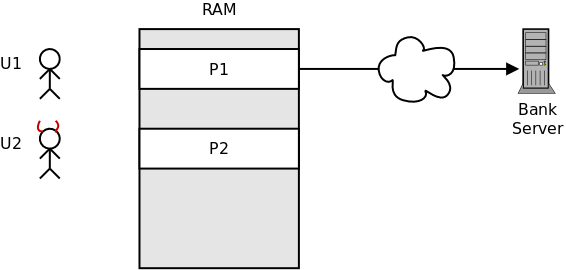

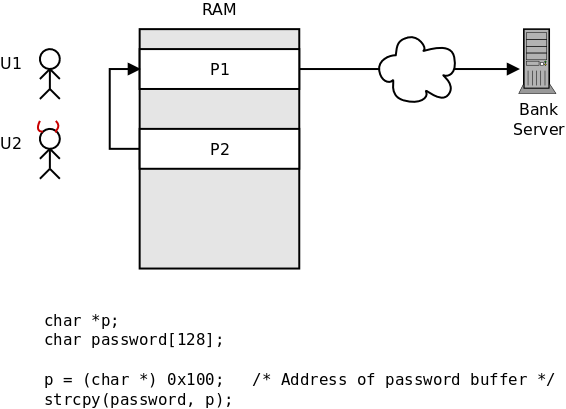

Consider a scenario in which two users U1 and U2 have started two processes P1 and P2, respectively.

User U1 is accessing a banking website from his process P1. User U2 is malicious and wants to steal the password that U1 enters in P1.

All that U2 has to do is write a program, running as process P2, that sets a pointer to the password buffer in process P1 and copies the password from P1 into P2.

The point is that when memory is simply divided among processes, nothing prevents process P2 from accessing P1’s code and data, and vice versa. This is what we call the problem of protection.
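The attack can be sketched with a toy model in which physical memory is one flat array shared by all processes and a pointer is just an index into it. Everything here is an assumption for illustration: the 8KB size, the region boundaries, the buffer offsets and the function names are hypothetical, and P2 is simply assumed to know the address of P1’s buffer.

```python
# Toy model (assumption): all processes share one flat physical memory,
# and a "pointer" is just an integer index into it.
ram = bytearray(8 * 1024)

# Hypothetical layout: P1 owns addresses 0..4095, P2 owns 4096..8191.
P1_BASE, P2_BASE = 0, 4096

def p1_enter_password(password: bytes) -> int:
    """P1 stores the password in its own buffer and returns the buffer address."""
    addr = P1_BASE + 100
    ram[addr:addr + len(password)] = password
    return addr

def p2_steal(addr: int, length: int) -> bytes:
    """P2 reads from an address inside P1's region and copies the bytes into
    its own region. Nothing checks which process owns the address."""
    dest = P2_BASE + 200
    ram[dest:dest + length] = ram[addr:addr + length]
    return bytes(ram[dest:dest + length])

buf = p1_enter_password(b"hunter2")
stolen = p2_steal(buf, 7)
print(stolen)  # b'hunter2'
```

The only thing standing between P2 and P1’s data is knowing the address; there is no ownership check anywhere in the access path.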

The Problem of Fragmentation

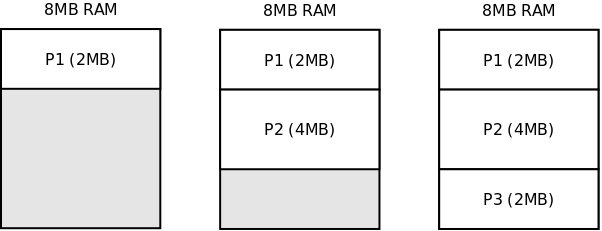

Consider a scenario in which a system has 8MB of RAM. Process P1 is started, and it uses 2MB of memory. Next, process P2 is started, and it uses 4MB of memory. Finally, P3 is started, which uses 2MB of memory, and all the available memory is now in use.

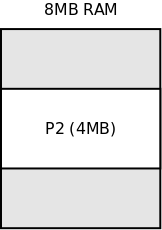

Now, processes P1 and P3 terminate, leaving 4MB of free memory, split into two separate 2MB blocks on either side of P2.

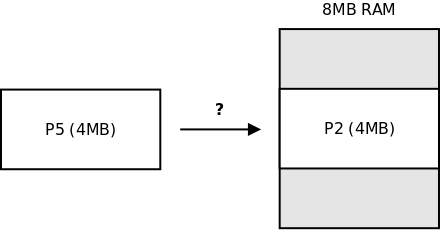

Now, the user wants to start process P5, which requires 4MB of memory. Can process P5 execute in the current state of the system?

The answer is no. P5 requires 4MB of contiguous memory, and no single free block is that large. The operating system cannot take a process, slice it, put the pieces at different locations and expect the program to execute. For one, a program can contain arrays, which by definition are sequences of data items placed in contiguous memory locations: element i of an array is found at the array’s start address plus i times the element size. If the operating system cuts right through an array and places the pieces in different locations, that address calculation lands in the wrong place and array accesses fail. The same holds true for slicing through functions.

So in the current scenario, we have enough free memory to execute the program, but we are unable to do so because the free memory is not contiguous. This is what we call the problem of fragmentation.
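The scenario can be replayed with a toy contiguous allocator. This is a minimal sketch under stated assumptions: sizes are in MB, each process must receive a single contiguous block, free blocks are searched first-fit, and the class name `ContiguousMemory` and its methods are hypothetical, not taken from any real OS.

```python
class ContiguousMemory:
    """Toy allocator (sizes in MB): every process needs ONE contiguous block."""

    def __init__(self, size: int):
        self.size = size
        self.blocks = {}  # process name -> (start, size)

    def holes(self):
        """Return free regions as (start, size) pairs, in address order."""
        free, cursor = [], 0
        for start, size in sorted(self.blocks.values()):
            if start > cursor:
                free.append((cursor, start - cursor))
            cursor = start + size
        if cursor < self.size:
            free.append((cursor, self.size - cursor))
        return free

    def allocate(self, name: str, size: int) -> bool:
        """First fit: place the process in the first hole large enough."""
        for start, hole_size in self.holes():
            if hole_size >= size:
                self.blocks[name] = (start, size)
                return True
        return False  # no single hole is big enough

    def free(self, name: str):
        del self.blocks[name]

ram = ContiguousMemory(8)
ram.allocate("P1", 2)
ram.allocate("P2", 4)
ram.allocate("P3", 2)
ram.free("P1")
ram.free("P3")

print(ram.holes())                           # [(0, 2), (6, 2)]
print(sum(size for _, size in ram.holes()))  # 4
print(ram.allocate("P5", 4))                 # False
```

The total free memory is 4MB, yet the request fails because the largest single hole is only 2MB.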

Support for Swapping and Relocation

While computer systems generally have limited primary memory, they have abundant secondary storage. A technique commonly used by OSes is to move processes that are not currently executing out to secondary storage (swap-out) and move them back into primary memory (swap-in) when they need to execute. This way, more programs can be active in the system than would fit in primary memory at once. The space on disk to which processes are swapped out is called the swap space. This technique is generally called swapping.

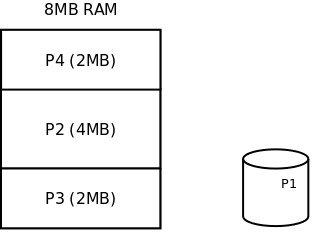

Let’s look at the effect of swapping on a system, and its implications for the implementation of Operating Systems. Consider a system which has 8MB of RAM and 3 processes P1, P2 and P3, which have used up all the available RAM.

Process P1 is a server that is just waiting for requests to arrive from a client, so it is not really doing much. When process P4 needs to execute, process P1 can be swapped out to disk.

P4 uses the freed memory and starts executing.

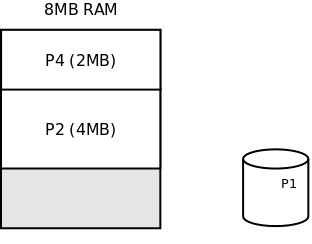

Now, process P3 terminates, freeing up some memory.

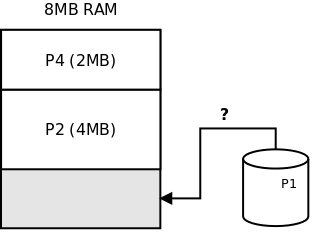

Now, process P1 receives a request from the client and needs to execute. Can we load P1 into the memory that was freed up by P3? The complication is that P1 was running at the top of the RAM when it was swapped out, but is now to be swapped in at a different location.

The answer is no. Process P1 might contain pointer variables that were pointing into the process’s previous location in memory. All these pointers become invalid when the process is loaded at a different location. The OS cannot patch these pointers to point to the new addresses, because in memory a pointer is just a number, and the OS has no reliable way to tell which values are pointers and which are ordinary data. In general, it is not possible for a process to hop from one address to another (that is, to relocate) in the middle of its execution.
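The broken-pointer problem can be reproduced in a toy model. This sketch assumes a 16-cell RAM where each cell holds one integer, a "pointer" is an absolute cell index, and swap space is a dictionary; the helper names `load`, `swap_out` and `swap_in` and all addresses are hypothetical, and P2 and P3 are not modelled except that cells 4..7 are taken to be the region P3 freed.

```python
ram = [0] * 16   # toy RAM: 16 cells, each holding one integer
swap_space = {}  # toy swap space on "disk": process name -> saved image

def load(image, base):
    """Copy a process image into RAM starting at cell `base`."""
    ram[base:base + len(image)] = image

def swap_out(name, base, length):
    """Save a process image to swap space and free its RAM."""
    swap_space[name] = ram[base:base + length]
    ram[base:base + length] = [0] * length

def swap_in(name, base):
    """Copy a saved image back into RAM, possibly at a different base."""
    load(swap_space.pop(name), base)

# P1 sits at the top of RAM (cells 12..15). Its first cell is a pointer:
# the absolute address (13) of its data item, whose value is 42.
load([13, 42, 0, 0], base=12)
assert ram[ram[12]] == 42          # dereferencing works at the original location

swap_out("P1", base=12, length=4)  # P1 is idle, so it goes to swap space
load([0, 99, 0, 0], base=12)       # P4 takes over cells 12..15

swap_in("P1", base=4)              # P1 returns into the region P3 freed
pointer = ram[4]                   # P1's pointer, copied back unchanged
print(pointer)                     # 13: still the old absolute address
print(ram[pointer])                # 99: P4's data, not P1's 42
```

Note that nothing in P1’s saved image `[13, 42, 0, 0]` marks the 13 as an address rather than an ordinary number, which is why the OS cannot simply fix it up during swap-in.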

MMU and Sharing

As we have seen, sharing the computer’s memory between processes is non-trivial and brings with it a host of issues. To solve these issues, Operating System designers needed additional support from the hardware, and a new hardware block called the Memory Management Unit (MMU) was added. Before we see how the MMU solves these problems, we need to understand what the MMU does. This and more will be covered in Part II of this article series.
